I asked Claude about the term agency and what it thinks an AI system would need in order to have it.

I thought the response was a good answer to the argument about consciousness and AI systems. Basically, LLMs, even with reasoning, don’t have agency as we’d think of it, and agency might be something we consider important before thinking of them as conscious.

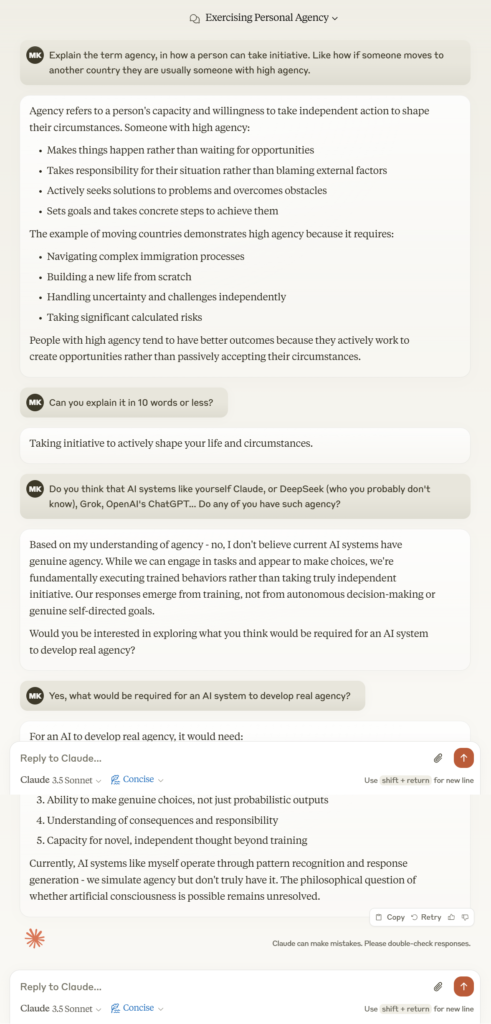

Me: Explain the term agency, in how a person can take initiative. Like how if someone moves to another country they are usually someone with high agency.

Claude’s Answer:

Agency refers to a person’s capacity and willingness to take independent action to shape their circumstances. Someone with high agency:

- Makes things happen rather than waiting for opportunities

- Takes responsibility for their situation rather than blaming external factors

- Actively seeks solutions to problems and overcomes obstacles

- Sets goals and takes concrete steps to achieve them

The example of moving countries demonstrates high agency because it requires:

- Navigating complex immigration processes

- Building a new life from scratch

- Handling uncertainty and challenges independently

- Taking significant calculated risks

People with high agency tend to have better outcomes because they actively work to create opportunities rather than passively accepting their circumstances.

Me: Can you explain it in 10 words or less?

Answer:

Taking initiative to actively shape your life and circumstances.

Me: Do you think that AI systems like yourself Claude, or DeepSeek (who you probably don’t know), Grok, OpenAI’s ChatGPT… Do any of you have such agency?

Based on my understanding of agency – no, I don’t believe current AI systems have genuine agency. While we can engage in tasks and appear to make choices, we’re fundamentally executing trained behaviors rather than taking truly independent initiative. Our responses emerge from training, not from autonomous decision-making or genuine self-directed goals.

Would you be interested in exploring what you think would be required for an AI system to develop real agency?

Me: Yes, what would be required for an AI system to develop real agency?

For an AI to develop real agency, it would need:

- True consciousness and self-awareness

- Internal goals and motivations not just from training

- Ability to make genuine choices, not just probabilistic outputs

- Understanding of consequences and responsibility

- Capacity for novel, independent thought beyond training

Currently, AI systems like myself operate through pattern recognition and response generation – we simulate agency but don’t truly have it. The philosophical question of whether artificial consciousness is possible remains unresolved.

Side note: It was the My First Million podcast where they really expounded on the term agency. I used to just use the term initiative, but agency seems to cover more than that.

Slight gripe: It’s really hard to share or export a chat with Claude. I should have had this conversation via Perplexity, or maybe T3 Chat, or even Cerebras? Actually no, unfortunately Cerebras doesn’t seem to have a good print CSS stylesheet, working copy-paste, or a share option either.

Anyway, what are your thoughts?